Low-resource domain adaptation is a recurring problem for companies. Here is a collection of tricks I have found that can help you build better models.

Data augmentation

Use a masked language model (MLM) to replace words with contextually plausible substitutes such as synonyms
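A minimal sketch of MLM-based replacement, assuming a `predict_masked` callable that returns candidate tokens for a single `[MASK]` position, best first. In practice this could wrap the Hugging Face `fill-mask` pipeline; the `toy_predictor` below is a hypothetical stand-in so the example runs offline.

```python
import random
from typing import Callable, List

MASK = "[MASK]"  # mask token for BERT-style models; other models use a different string


def mlm_augment(
    text: str,
    predict_masked: Callable[[str], List[str]],
    n_replacements: int = 1,
    seed: int = 0,
) -> str:
    """Replace up to `n_replacements` random words with a masked LM's top prediction.

    `predict_masked` (hypothetical interface) takes a sentence containing one
    MASK token and returns candidate tokens, best first.
    """
    rng = random.Random(seed)
    words = text.split()
    positions = rng.sample(range(len(words)), k=min(n_replacements, len(words)))
    for pos in positions:
        original = words[pos]
        masked = " ".join(words[:pos] + [MASK] + words[pos + 1:])
        # Take the best candidate that differs from the word being replaced.
        for candidate in predict_masked(masked):
            if candidate.lower() != original.lower():
                words[pos] = candidate
                break
    return " ".join(words)


def toy_predictor(masked_sentence: str) -> List[str]:
    # Hypothetical stand-in for a real masked LM: always the same candidates.
    return ["great", "good", "fine"]


print(mlm_augment("the movie was good", toy_predictor, n_replacements=1))
```

With a real model, `predict_masked` could be something like `lambda s: [p["token_str"] for p in fill_mask(s)]`, where `fill_mask = pipeline("fill-mask", model="bert-base-uncased")`.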

If you have a large amount of unlabelled text, you can augment your data by retrieving unlabelled sentences that are textually similar to your labelled examples
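One way to read this, sketched under assumptions: for each labelled sentence, retrieve the most similar sentences from the unlabelled pool and let them inherit its label. Bag-of-words cosine similarity is used here only to keep the example dependency-free; sentence embeddings would usually be a better similarity measure, and the `threshold` value is arbitrary.

```python
import math
from collections import Counter
from typing import List, Tuple


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_augmentations(
    labelled: List[Tuple[str, str]],
    unlabelled: List[str],
    top_k: int = 1,
    threshold: float = 0.5,
) -> List[Tuple[str, str]]:
    """Give each labelled example's nearest unlabelled sentences its label."""
    pool = [(s, Counter(s.lower().split())) for s in unlabelled]
    augmented = []
    for text, label in labelled:
        query = Counter(text.lower().split())
        scored = sorted(((cosine(query, vec), s) for s, vec in pool), reverse=True)
        for score, sentence in scored[:top_k]:
            if score >= threshold:  # skip weak matches
                augmented.append((sentence, label))
    return augmented


labelled = [("the food was great", "pos"), ("the service was terrible", "neg")]
unlabelled = ["the food was really great", "terrible service again", "what time is it"]
print(retrieve_augmentations(labelled, unlabelled))
```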

Generative models such as GANs and BART can also be used for text augmentation

Prepend the label to the input to do conditional data augmentation, so the generator learns to produce text for a given label
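A sketch of the data formatting only, assuming a seq2seq or causal LM is then fine-tuned on these strings (fine-tuning is not shown). The `[SEP]` separator and the string layout are assumptions; the point is that the label at the start of the input conditions generation, so at inference time you prompt with just a label and let the model write the text.

```python
from typing import List, Tuple

SEP = " [SEP] "  # hypothetical separator; any token that never appears in the data works


def to_training_text(label: str, text: str) -> str:
    # The label is prepended so the generator learns p(text | label).
    return f"{label}{SEP}{text}"


def generation_prompt(label: str) -> str:
    # At generation time, give only the label and let the model complete the text.
    return f"{label}{SEP}"


def parse_generated(output: str) -> Tuple[str, str]:
    # Split a generated string back into (label, text).
    label, _, text = output.partition(SEP)
    return label, text.strip()


train = [("positive", "great food and friendly staff"), ("negative", "cold food, slow service")]
corpus: List[str] = [to_training_text(l, t) for l, t in train]
print(corpus[0])  # positive [SEP] great food and friendly staff
```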

Removal: character, word, or span removal

Word replacement: WordNet synonyms, nearest neighbours in pretrained embeddings, or custom embeddings

Word expansions and contractions (e.g. "it is" and "it's")

Swapping: adjacent words or adjacent sentences

Paraphrase via seq2seq and syntactic trees

Back-translation
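A minimal, dependency-free sketch of several of the simpler operations listed above: character, word, and span removal, adjacent word or sentence swaps, contraction expansion and contraction, and word replacement by nearest neighbour in an embedding table. The contraction map and the tiny embedding table are hypothetical placeholders; in practice you would use a full contraction list and pretrained (or custom) vectors. WordNet replacement, paraphrasing, and back-translation need external libraries or models and are not shown.

```python
import math
import random
import re
from typing import Dict, List

rng = random.Random(42)  # fixed seed so augmentations are reproducible


def char_removal(text: str, p: float = 0.1) -> str:
    # Drop each non-space character with probability p.
    return "".join(c for c in text if c == " " or rng.random() >= p)


def word_removal(words: List[str], p: float = 0.1) -> List[str]:
    # Drop each word with probability p, but never return an empty sentence.
    kept = [w for w in words if rng.random() >= p]
    if not kept and words:
        kept = [rng.choice(words)]
    return kept


def span_removal(words: List[str], span_len: int = 2) -> List[str]:
    # Delete one contiguous span of `span_len` words.
    if len(words) <= span_len:
        return words[:]
    start = rng.randrange(len(words) - span_len + 1)
    return words[:start] + words[start + span_len:]


def swap_adjacent(items: List[str]) -> List[str]:
    # Swap one random adjacent pair; works on a list of words or of sentences.
    if len(items) < 2:
        return items[:]
    i = rng.randrange(len(items) - 1)
    out = items[:]
    out[i], out[i + 1] = out[i + 1], out[i]
    return out


CONTRACTIONS = {"it's": "it is", "don't": "do not", "can't": "cannot"}  # tiny illustrative map


def expand_contractions(words: List[str]) -> List[str]:
    # "it's" -> "it is" (the expansion comes back lower-cased).
    return [CONTRACTIONS.get(w.lower(), w) for w in words]


def contract(text: str) -> str:
    # "it is" -> "it's", matching whole words only (so "split is" is untouched).
    for short, full in CONTRACTIONS.items():
        text = re.sub(rf"\b{re.escape(full)}\b", short, text)
    return text


def _cos(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def embedding_replace(word: str, emb: Dict[str, List[float]]) -> str:
    # Swap a word for its most cosine-similar neighbour in the embedding table.
    candidates = [w for w in emb if w != word]
    if word not in emb or not candidates:
        return word
    return max(candidates, key=lambda w: _cos(emb[word], emb[w]))
```

Each function returns a new object, so several operations can be chained on one sentence to produce a single augmented example.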

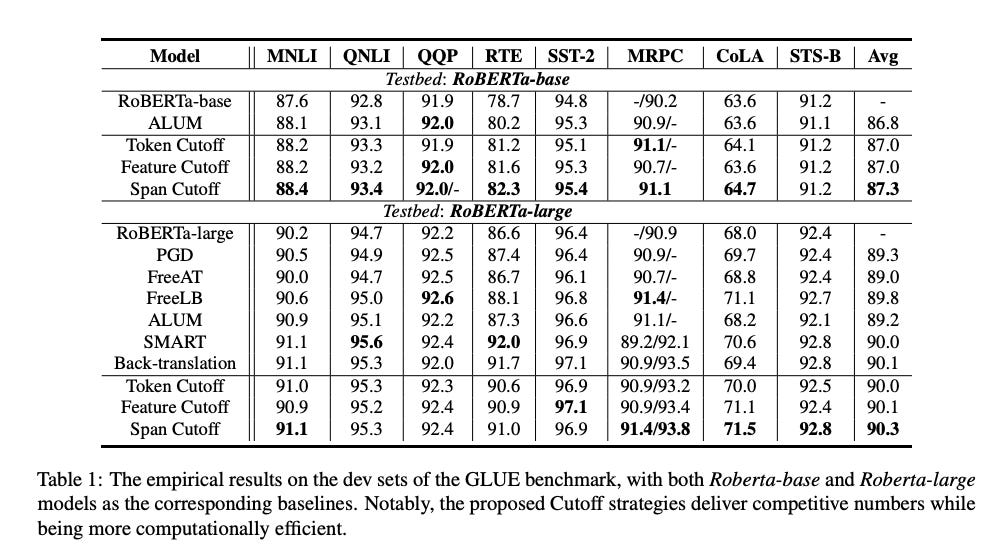

“In this paper, we introduce a set of simple yet efficient data augmentation strategies dubbed cutoff, where part of the information within an input sentence is erased to yield its restricted views (during the fine-tuning stage). Notably, this process relies merely on stochastic sampling and thus adds little computational overhead.”
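A sketch of the three cutoff variants from that paper (token, feature, and span cutoff), applied to a sequence of input embeddings represented here as a plain list of vectors. The paper also adds a consistency loss between the original and the cutoff views, which is not shown; the `ratio` values are assumptions.

```python
import random
from typing import List

Matrix = List[List[float]]  # seq_len x hidden_dim input embeddings


def _copy(x: Matrix) -> Matrix:
    return [row[:] for row in x]


def token_cutoff(x: Matrix, ratio: float, rng: random.Random) -> Matrix:
    # Zero out the whole embedding of randomly chosen tokens.
    out = _copy(x)
    for i in rng.sample(range(len(x)), int(len(x) * ratio)):
        out[i] = [0.0] * len(x[i])
    return out


def feature_cutoff(x: Matrix, ratio: float, rng: random.Random) -> Matrix:
    # Zero out the same randomly chosen hidden dimensions for every token.
    out = _copy(x)
    dim = len(x[0])
    dims = rng.sample(range(dim), int(dim * ratio))
    for row in out:
        for d in dims:
            row[d] = 0.0
    return out


def span_cutoff(x: Matrix, ratio: float, rng: random.Random) -> Matrix:
    # Zero out one contiguous span of tokens.
    out = _copy(x)
    span = int(len(x) * ratio)
    if span == 0:
        return out
    start = rng.randrange(len(x) - span + 1)
    for i in range(start, start + span):
        out[i] = [0.0] * len(x[i])
    return out
```

Because each view only needs random sampling and masking, this matches the quote's point that cutoff adds little computational overhead.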

Use the Hugging Face Transformers zero-shot classification pipeline to label data. It frames classification as an entailment (NLI) task and picks the label whose hypothesis the text most strongly entails.*
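Roughly how entailment-based zero-shot labelling works, as a sketch: each candidate label is turned into a hypothesis such as "This example is {label}.", an NLI model scores whether the text entails it, and the entailment scores are normalised across labels. `toy_nli` below is a hypothetical stand-in (word overlap) for a real NLI model; with Transformers you would normally call `pipeline("zero-shot-classification")` and pass `candidate_labels` instead.

```python
import math
from typing import Callable, Dict, List


def zero_shot_label(
    text: str,
    labels: List[str],
    nli_entailment_logit: Callable[[str, str], float],
    template: str = "This example is {}.",
) -> Dict[str, float]:
    """Score each label by how strongly `text` entails its hypothesis."""
    logits = [nli_entailment_logit(text, template.format(label)) for label in labels]
    # Softmax over the entailment logits of all candidate labels (single-label case).
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}


def toy_nli(premise: str, hypothesis: str) -> float:
    # Hypothetical stand-in for an NLI model's entailment logit: shared-word count.
    return float(len(set(premise.lower().split()) & set(hypothesis.lower().rstrip(".").split())))


scores = zero_shot_label("refund my order please", ["refund", "shipping"], toy_nli)
best = max(scores, key=scores.get)
```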

GPT-3

Prime the GPT-3 API with a few labelled examples and use it to label more data.*
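A sketch of how such a few-shot prompt might be assembled and the completion parsed. The "Text:"/"Label:" layout is an assumption, and the API call itself is left out. Accepting only answers that match a known label guards against free-form completions.

```python
from typing import List, Optional, Tuple


def build_prompt(examples: List[Tuple[str, str]], query: str, labels: List[str]) -> str:
    """Few-shot prompt: instruction, labelled examples, then the unlabelled query."""
    lines = [f"Classify each text as one of: {', '.join(labels)}.", ""]
    for text, label in examples:
        lines += [f"Text: {text}", f"Label: {label}", ""]
    lines += [f"Text: {query}", "Label:"]
    return "\n".join(lines)


def parse_label(completion: str, labels: List[str]) -> Optional[str]:
    # Keep only answers that match a known label; otherwise return None and discard the sample.
    words = completion.strip().split()
    answer = words[0].strip(".").lower() if words else ""
    return next((l for l in labels if l.lower() == answer), None)


prompt = build_prompt([("I love it", "positive")], "Terrible.", ["positive", "negative"])
print(prompt)
```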

kNN

Use k-nearest neighbours to label the dataset: each unlabelled example gets the majority label of its k closest labelled examples.*
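A minimal kNN labelling sketch, assuming examples are already represented as vectors (for instance sentence embeddings; the 2-d vectors below are made up). Each query gets the majority label among its k most cosine-similar labelled vectors, and the vote share is returned as a rough confidence that can feed sample weighting.

```python
import math
from collections import Counter
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def knn_label(
    query: List[float], labelled: List[Tuple[List[float], str]], k: int = 3
) -> Tuple[str, float]:
    """Return (majority label, fraction of the k neighbours that voted for it)."""
    neighbours = sorted(labelled, key=lambda item: cosine(query, item[0]), reverse=True)[:k]
    label, votes = Counter(lbl for _, lbl in neighbours).most_common(1)[0]
    return label, votes / len(neighbours)


labelled = [([1.0, 0.0], "sports"), ([0.9, 0.1], "sports"), ([0.0, 1.0], "politics")]
print(knn_label([0.8, 0.2], labelled, k=3))
```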

Weak supervision

Train a model on the limited labelled data

Use it to predict labels for unlabelled data

Correct the predictions that are wrong, and confirm those that are correct but have low probability (hard samples)

Retrain the classifier on the new data*
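The review step above can be sketched as follows, assuming the model outputs a probability for its predicted class. The least confident predictions are reviewed first; the `review` callback (a hypothetical human-labelling function), the `budget`, and the 0.7 cut-off for "low probability" are illustrative assumptions.

```python
from typing import Callable, List, Tuple

Prediction = Tuple[str, str, float]  # (text, predicted_label, probability)


def review_round(
    predictions: List[Prediction],
    review: Callable[[str], str],
    budget: int,
    low_prob: float = 0.7,
) -> Tuple[List[Tuple[str, str]], List[str], List[str]]:
    """Human-review the least confident predictions and collect new training data.

    Returns (new_examples, corrected_texts, hard_texts).
    """
    # Least confident first, so the review budget goes to likely errors and hard samples.
    queue = sorted(predictions, key=lambda p: p[2])[:budget]
    new_examples, corrected, hard = [], [], []
    for text, predicted, prob in queue:
        gold = review(text)  # hypothetical human-labelling callback
        new_examples.append((text, gold))
        if gold != predicted:
            corrected.append(text)  # prediction was wrong
        elif prob < low_prob:
            hard.append(text)  # correct but low probability
    return new_examples, corrected, hard
```

The returned `new_examples` are added to the training set before the next round of training.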

Modifying optimizer/model/training

Revisiting Few-sample BERT Fine-tuning

“First, we show that the debiasing omission in BERTAdam is the main cause of degenerate models on small datasets commonly observed in previous work.

Second, we observe the top layers of the pre-trained BERT provide a detrimental initialization for fine-tuning and delay learning. Simply re-initializing top layers not only speeds up learning but also leads to better model performance.

Third, we demonstrate that the common one-size-fits-all three-epochs practice for BERT fine-tuning is sub-optimal and allocating more training time can stabilize fine-tuning.

Finally, we revisit several methods proposed for stabilizing BERT fine-tuning and observe that their positive effects are reduced with the debiased ADAM.”
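To see why omitting bias correction matters, here is a worked example of a single Adam step on one parameter, with and without the bias-correction terms. The β values are the common Adam defaults (0.9 and 0.999); the learning rate and gradient are arbitrary. Starting from zero moments, the uncorrected first step is about 3.16 times larger, because (1 - β1) / sqrt(1 - β2) = 0.1 / sqrt(0.001) ≈ 3.16.

```python
import math


def adam_first_step(grad: float, lr: float = 2e-5, beta1: float = 0.9,
                    beta2: float = 0.999, eps: float = 1e-8,
                    bias_correction: bool = True) -> float:
    """Size of Adam's update at step t=1, starting from zero moment estimates."""
    t = 1
    m = (1 - beta1) * grad       # first moment after one step
    v = (1 - beta2) * grad ** 2  # second moment after one step
    if bias_correction:
        m = m / (1 - beta1 ** t)
        v = v / (1 - beta2 ** t)
    return lr * m / (math.sqrt(v) + eps)


with_bc = adam_first_step(0.5)
without_bc = adam_first_step(0.5, bias_correction=False)
print(without_bc / with_bc)  # roughly 3.16
```

PyTorch's `torch.optim.AdamW`, for example, applies bias correction, so it does not have the BERTAdam omission.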

On the Stability of Fine-tuning BERT

“Use small learning rates combined with bias correction to avoid vanishing gradients early in training.

Increase the number of iterations considerably and train to (almost) zero training loss while making use of early stopping.”
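A minimal early-stopping helper in the spirit of the second recommendation: train for many iterations, but remember the best validation score and stop once it has not improved for `patience` evaluations. The patience value and the choice of validation metric are assumptions.

```python
from typing import Optional


class EarlyStopping:
    """Track a validation metric (higher is better) and signal when to stop."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best: Optional[float] = None
        self.best_step: Optional[int] = None
        self.bad_evals = 0

    def update(self, step: int, score: float) -> bool:
        """Record a validation score; return True if training should stop."""
        if self.best is None or score > self.best + self.min_delta:
            self.best, self.best_step, self.bad_evals = score, step, 0
            # A real training loop would save a checkpoint here.
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience
```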

Contrastive learning

SimCLRv2 works well for low-resource image classification. Contrastive learning can similarly help in low-resource NLP.

Supervised Contrastive Learning For Pre-trained Language Model Fine-tuning

“We obtain strong improvements on few-shot learning settings (20, 100, 1000 labeled examples), leading up to 10.7 points improvement for 20 labeled examples.

Models trained with SCL are not only robust to the noise in the training data, but also generalize better to related tasks with limited labeled data.”
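A plain-Python sketch of the supervised contrastive (SCL) term as described in that paper. Embeddings are L2-normalised, and for each anchor the loss pulls same-label examples together relative to all other examples in the batch, with temperature τ. In the paper this term is combined with cross-entropy as (1 - λ) * CE + λ * SCL. The default `temperature` is an arbitrary choice, and anchors with no same-label partner are skipped (an assumption to avoid dividing by zero).

```python
import math
from typing import List


def normalize(v: List[float]) -> List[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def scl_loss(embeddings: List[List[float]], labels: List[int], temperature: float = 0.3) -> float:
    """Supervised contrastive loss summed over a batch."""
    z = [normalize(e) for e in embeddings]
    n = len(z)
    # Pairwise dot products of normalised embeddings, divided by the temperature.
    sim = [[sum(a * b for a, b in zip(z[i], z[j])) / temperature for j in range(n)] for i in range(n)]
    total = 0.0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # no same-label partner for this anchor in the batch
        # Log of the softmax denominator over all k != i.
        log_denom = math.log(sum(math.exp(sim[i][k]) for k in range(n) if k != i))
        total += -sum(sim[i][j] - log_denom for j in positives) / len(positives)
    return total
```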

Custom architecture

Combining BERT with Static Word Embeddings for Categorizing Social Media

Because subword models capture more information and are robust to out-of-vocabulary (OOV) words, fastText can be used in place of GloVe in the architecture above. Adding features from multilingual transformer models can also help, but it makes inference more compute-heavy.
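One simple way to combine the two representations, as a sketch rather than the paper's exact architecture: average the static (GloVe or fastText) vectors of the words in a text and concatenate the result with the transformer's sentence vector (e.g. the [CLS] output) before the classifier. The tiny vector table and the `bert_vector` input are hypothetical placeholders.

```python
from typing import Dict, List


def mean_static_vector(tokens: List[str], table: Dict[str, List[float]], dim: int) -> List[float]:
    """Average static word vectors; tokens missing from the table are skipped."""
    vecs = [table[t] for t in tokens if t in table]
    if not vecs:
        return [0.0] * dim
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def combined_features(bert_vector: List[float], tokens: List[str],
                      table: Dict[str, List[float]], dim: int) -> List[float]:
    # Concatenate contextual and static features; a classifier is trained on top.
    return bert_vector + mean_static_vector(tokens, table, dim)
```

With fastText, OOV words would get a subword-based vector instead of being skipped, which is the advantage mentioned above.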

Bootstrap embeddings

Conclusion

There are many techniques for getting better results in a low-resource setting. Choose those with high impact and low complexity. Let me know in the comments if you have a trick to add.

If you found this useful, please share it with others.


*Data labelled this way will not be perfect, so give it a lower sample weight during training or train on it with label smoothing.
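Both options can be expressed in one loss. The sketch below computes a per-example cross-entropy against a label-smoothed target and scales it by a sample weight. The weight of 0.5 for automatically labelled data and the smoothing of 0.1 are illustrative assumptions, not recommendations from the source.

```python
import math
from typing import List


def log_softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]


def weighted_smoothed_ce(logits: List[float], target: int,
                         smoothing: float = 0.0, weight: float = 1.0) -> float:
    """Cross-entropy with a label-smoothed target, scaled by a sample weight."""
    k = len(logits)
    logp = log_softmax(logits)
    # Smoothed target: (1 - smoothing) on the true class plus smoothing / k on every class.
    q = [smoothing / k + (1.0 - smoothing if i == target else 0.0) for i in range(k)]
    return weight * -sum(qi * lp for qi, lp in zip(q, logp))


gold = weighted_smoothed_ce([2.0, 0.5, -1.0], target=0)  # human-labelled example
pseudo = weighted_smoothed_ce([2.0, 0.5, -1.0], target=0, smoothing=0.1, weight=0.5)  # auto-labelled
```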

Come join Maxpool - A Data Science community to discuss real ML problems!