Pain of working with LLMs - prompt engineering, fine-tuning, collecting data for fine-tuning, no interpretability, hallucinations

Goal - Make LLM execute any task by learning from its actions.

Meta-pattern - Imitate human behaviour and abilities - Reflect, plan, memorise

LLMs need to reason in order to create subtasks.

LLMs need to act on the environment to fetch required information.

LLMs need to reflect on the mistakes to avoid making them again.

LLMs need to ask follow-up questions when needed, so they do not commit to an answer too early.

Let’s discuss some of the major breakthroughs that help LLMs perform tasks better.

ReAct

Combine the ability of LLM to reason and act with the environment to complete the task with sequences of thought→action→observation.

Large language models (LLMs) have been shown to be effective in a wide range of natural language processing tasks, but they also have limitations. One of the main challenges is that their outputs are hard to interpret, which makes it hard to trust them or to identify potential errors. ReAct addresses this challenge by having the model generate a reasoning trace alongside each action, so the output records both what the model does and why it does it. This makes the reasoning process more transparent and interpretable, which can improve human trust in the model's outputs.

Another challenge is that LLMs may struggle with tasks that require both reasoning and acting, such as decision making or problem solving. ReAct addresses this challenge by interleaving reasoning and action generation as the model works through a task, which allows it to adapt its next action based on new observations or unexpected inputs. Overall, ReAct has been shown to improve the interpretability and trustworthiness of LLMs while also improving their performance on tasks that require both reasoning and acting.

ReAct is a way to teach computers to do things that require both thinking and doing, like answering questions or making decisions. It works by having the computer generate both a plan for what it should do and an explanation of why it's doing it. This helps the computer adapt its plan if something unexpected happens.

For example, imagine you're trying to teach a robot how to make a sandwich. ReAct would help the robot come up with a plan for making the sandwich, like getting bread and putting peanut butter on it. But it would also explain why it's doing those things, like "I'm getting bread because I need something to put the peanut butter on." If something unexpected happens, like there's no bread left, ReAct would help the robot come up with a new plan based on that explanation.
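The thought→action→observation cycle can be sketched in a few lines of Python. This is a minimal illustration built on the sandwich example, not the ReAct authors' implementation: `react_loop`, `scripted_policy`, the `check_pantry` tool and the pantry contents are all hypothetical names introduced here, and the scripted policy stands in for a real LLM call.

```python
from typing import Callable, Dict, List, Optional, Tuple

# One step of the trajectory: (thought, action, observation).
Step = Tuple[str, str, str]
# A policy returns (thought, action name, action argument) given the history.
Policy = Callable[[str, List[Step]], Tuple[str, str, str]]


def react_loop(task: str, policy: Policy, tools: Dict[str, Callable[[str], str]],
               max_steps: int = 5) -> Tuple[Optional[str], List[Step]]:
    """Alternate thought -> action -> observation until the policy finishes."""
    trajectory: List[Step] = []
    for _ in range(max_steps):
        thought, action, argument = policy(task, trajectory)
        if action == "finish":
            trajectory.append((thought, f"finish[{argument}]", ""))
            return argument, trajectory
        observation = tools[action](argument)  # act on the environment
        trajectory.append((thought, f"{action}[{argument}]", observation))
    return None, trajectory  # step budget exhausted without an answer


def scripted_policy(task: str, trajectory: List[Step]) -> Tuple[str, str, str]:
    """Hypothetical stand-in for an LLM; a real policy would prompt a model."""
    if not trajectory:
        return ("I need something to put the peanut butter on.", "check_pantry", "bread")
    _, last_action, last_observation = trajectory[-1]
    if last_observation == "none left":
        return ("There is no bread, so I need a new plan.", "check_pantry", "crackers")
    item = last_action.split("[")[1].rstrip("]")  # e.g. "crackers"
    return (f"The pantry has {item}, so I can finish.", "finish", f"peanut butter on {item}")


# Assumed toy environment: the pantry has run out of bread.
pantry = {"bread": "none left", "crackers": "in stock"}
tools = {"check_pantry": lambda item: pantry.get(item, "none left")}

answer, trajectory = react_loop("make a sandwich", scripted_policy, tools)
for thought, action, observation in trajectory:
    print(f"Thought: {thought}\nAction: {action}\nObservation: {observation}\n")
```

Because each observation is fed back into the next call of the policy, the missing bread changes the plan on the following step rather than derailing the whole run.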

Prompting has limited context window and learning support. Initial finetuning results on HotpotQA using ReAct prompting trajectories suggest: (1) ReAct is the best finetuning format across model sizes; (2) ReAct finetuned smaller models outperform prompted larger models! [1]

Self-Refine

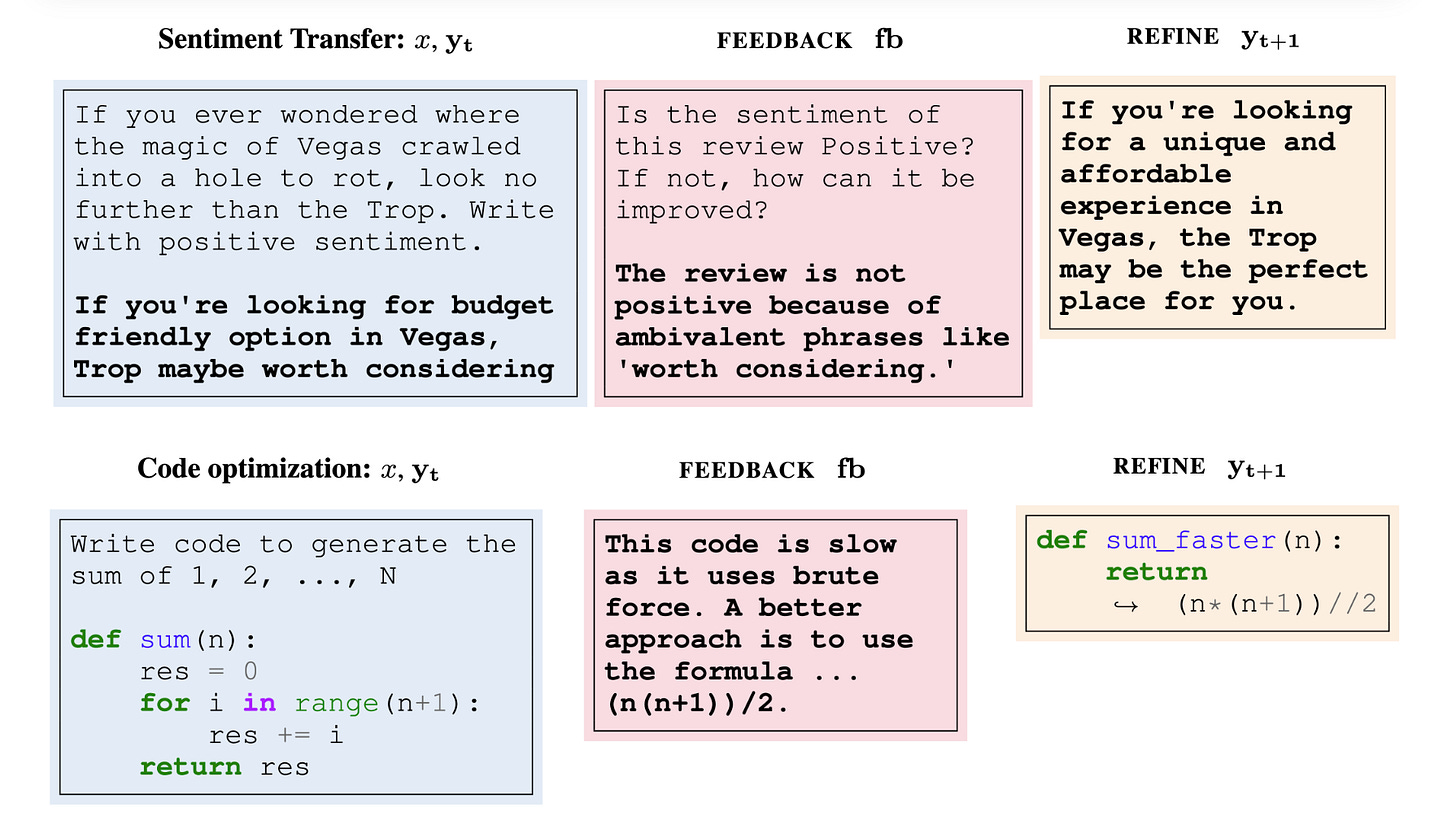

Keep refining the output with feedback until the acceptance condition is met.

Sometimes the text generated isn't very good because the task is too complicated or doesn't have clear goals. To make the text better, we need to give the program feedback and let it try again. That's what the SELF-REFINE framework does - it helps the LLM improve its own text by giving it feedback and letting it try again.

Let's say we want the LLM to generate a positive review of a restaurant. The LLM might generate a sentence like "The food was good." But that's not a very interesting or detailed review. So, we can use the SELF-REFINE framework to give the LLM feedback on its output and let it try again. For example, we might tell the LLM that we want the review to be more colorful and use extreme phrases like "the food was amazing" or "I would eat here every day if I could." The LLM can then generate a new sentence based on this feedback, and we can continue this process until we get a review that meets our desired criteria.

    def self_refine(prompt: str) -> str:
        # ChatGPT, feedback_prompt and refiner_prompt are placeholders for an
        # LLM call and two prompt templates; they are not defined here.
        def is_refinement_sufficient(prompt, feedback, initial, refined) -> bool:
            # Define stopping criteria here
            pass

        answer = ChatGPT(prompt)

        while True:
            feedback = ChatGPT(feedback_prompt, answer)
            refined = ChatGPT(refiner_prompt, feedback, answer)

            if is_refinement_sufficient(prompt, feedback, answer, refined):
                break

            answer = refined

        return refined

    # source - selfrefine.info

The SELF-REFINE framework works by allowing an LLM to generate an output, then using the same model to provide feedback on its own output. The model then refines its previously generated output based on its own feedback. This process is repeated iteratively until the desired quality of output is achieved.
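The pseudocode above leaves the model call and the stopping criterion undefined. Here is a runnable sketch of the same generate→feedback→refine loop using the restaurant review example. Everything in it is an assumption made for illustration: the three stub functions stand in for LLM calls, the stopping rule checks the feedback text (rather than comparing drafts), and `max_rounds` is an added safety bound so the loop always terminates.

```python
from typing import Callable


def self_refine(prompt: str,
                generate: Callable[[str], str],
                give_feedback: Callable[[str, str], str],
                refine: Callable[[str, str, str], str],
                is_sufficient: Callable[[str], bool],
                max_rounds: int = 4) -> str:
    """Generate a draft, then alternate feedback and refinement."""
    answer = generate(prompt)
    for _ in range(max_rounds):
        feedback = give_feedback(prompt, answer)
        if is_sufficient(feedback):  # acceptance condition reached
            break
        answer = refine(prompt, answer, feedback)
    return answer


# Hypothetical stubs; in practice all three would be calls to the same LLM.
def generate(prompt: str) -> str:
    return "The food was good."


def give_feedback(prompt: str, answer: str) -> str:
    if "amazing" in answer:
        return "OK"
    return "Use stronger, more vivid wording."


def refine(prompt: str, answer: str, feedback: str) -> str:
    return answer.replace("good", "amazing")


review = self_refine("Write a positive restaurant review.",
                     generate, give_feedback, refine,
                     is_sufficient=lambda feedback: feedback == "OK")
print(review)
```

Passing the feedback and refinement steps in as functions keeps the loop itself independent of any particular model or prompt template.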

SELF-REFINE has potential applications in a variety of fields related to natural language processing and artificial intelligence. For example, it can be used to improve the quality of generated text in chatbots, virtual assistants, and other conversational agents. It can also be used to generate more accurate and specific responses in question-answering systems. In addition, SELF-REFINE can be applied to tasks such as summarization, translation, and sentiment analysis to improve the quality of generated outputs.

Toolformer

Augment LLM capabilities by enabling the model to select and use the right tool for the job.

One of the main problems with today's language models (LLMs) is that they lack the ability to use external tools such as search engines, calculators, or calendars. Existing approaches either rely on large amounts of human annotations or limit tool use to task-specific settings only, hindering a more widespread adoption of tool use in LMs.

Toolformer solves this problem by training a model to decide which APIs to call, when to call them, what arguments to pass, and how to best incorporate the results into future token prediction. This is done in a self-supervised way, requiring nothing more than a handful of demonstrations for each API.

The model is fine-tuned on a large number of sampled API calls that are filtered based on whether they reduce perplexity on future tokens. It thereby learns to use a range of tools through simple API calls: a calculator, a Q&A system, a search engine, a translation system, and a calendar. At generation time the model autonomously decides which API to call based on the context of the text it is generating and the information it needs to complete that text. Toolformer achieves substantially improved zero-shot performance across a variety of downstream tasks without sacrificing its core language modeling abilities.
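The filtering step can be pictured as follows. This is a simplified sketch, not Toolformer's actual code: it assumes each sampled API call already comes with two language-model losses on the tokens that follow it (one computed without the call, one with the call and its result in the context), and it keeps the call only if the loss drops by at least a threshold. The class name, the `min_gain` parameter and all the numbers are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CandidateCall:
    text: str                 # the sampled API call and its result
    loss_without_call: float  # LM loss on the following tokens, no call in context
    loss_with_call: float     # LM loss on the same tokens, call + result in context


def filter_calls(candidates: List[CandidateCall], min_gain: float = 1.0) -> List[CandidateCall]:
    """Keep only calls that make the following tokens easier to predict."""
    return [c for c in candidates
            if c.loss_without_call - c.loss_with_call >= min_gain]


# Illustrative values only; real losses would come from the language model.
candidates = [
    CandidateCall("Calculator(400 / 1400) -> 0.29", loss_without_call=3.2, loss_with_call=1.1),
    CandidateCall("Calendar() -> a date the text never uses", loss_without_call=2.0, loss_with_call=1.9),
]

kept = filter_calls(candidates)
print([c.text for c in kept])
```

Lower loss corresponds to lower perplexity, so a call survives only when its result genuinely helps the model predict the text that comes next; the surviving calls become the fine-tuning data.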

HuggingGPT

An LLM selects task-specific expert models from the Hugging Face Hub to complete the task.

The philosophy behind HuggingGPT is to use large language models (LLMs) as a controller to manage AI models and solve complex tasks. HuggingGPT is a collaborative system that consists of an LLM as the controller and numerous expert models as collaborative executors. The workflow of HuggingGPT consists of four stages: task planning, model selection, task execution, and response generation.

Here's how it works:

1. Task planning: HuggingGPT dissects the intent of users and decomposes the task into multiple sub-tasks.

2. Model selection: Based on expert model descriptions, HuggingGPT assigns the most suitable models for each sub-task.

3. Task execution: HuggingGPT invokes each selected model on its sub-task and collects the results.

4. Response generation: Finally, HuggingGPT generates a response to the user based on the results obtained from executing all sub-tasks.

By leveraging the strengths of LLMs in understanding and reasoning, and combining them with external expert models, HuggingGPT can effectively solve various forms of complex tasks with language as the interface to connect LLMs with AI models.
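The four stages can be laid out as a toy pipeline. This is only a sketch of the control flow: the model names, their descriptions and the keyword-based planner are hypothetical stand-ins, whereas in HuggingGPT the planning, selection and response steps are carried out by the LLM and the executors are real models from the hub.

```python
from typing import Callable, Dict, List

# Hypothetical expert models, each with a description the controller can read.
MODEL_DESCRIPTIONS: Dict[str, str] = {
    "caption-model": "image-to-text: describes an image",
    "translate-model": "translation: English to French",
}
MODELS: Dict[str, Callable[[str], str]] = {
    "caption-model": lambda image: f"a caption for {image}",
    "translate-model": lambda text: f"French({text})",
}


def plan_tasks(request: str) -> List[str]:
    """Stage 1 (stub for the LLM): decompose the request into sub-tasks."""
    tasks = []
    if "describe" in request:
        tasks.append("image-to-text")
    if "French" in request:
        tasks.append("translation")
    return tasks


def select_model(task: str) -> str:
    """Stage 2: pick the model whose description matches the sub-task."""
    for name, description in MODEL_DESCRIPTIONS.items():
        if description.startswith(task):
            return name
    raise LookupError(f"no model for task {task!r}")


def run(request: str, payload: str) -> str:
    steps = []
    data = payload
    for task in plan_tasks(request):
        model = select_model(task)
        data = MODELS[model](data)  # Stage 3: execute the selected model
        steps.append(f"{task} via {model}: {data}")
    return "; ".join(steps)         # Stage 4 (stub): compose the response


print(run("describe this image in French", "cat.png"))
```

Here the output of one sub-task feeds the next, which is one simple way to handle dependencies between sub-tasks.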

Self Ask

Generate follow-up questions to answer multi-hop questions. Follow-up questions can have their own follow-up questions.

The compositionality gap is a ratio that measures how often language models can correctly answer all sub-problems but still fail to generate the overall solution in compositional reasoning tasks. It is defined as the fraction of compositional questions that the model answers incorrectly out of all the compositional questions for which the model answers the sub-questions correctly. This ratio is used to evaluate the ability of language models to perform compositional reasoning tasks where the overall solution depends on correctly composing the answers to sub-problems.
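The definition translates directly into a small function. The sketch below assumes each evaluated question is recorded as a pair of booleans (were all sub-questions answered correctly, was the compositional question answered correctly); the function name and the sample records are illustrative.

```python
from typing import List, Tuple


def compositionality_gap(results: List[Tuple[bool, bool]]) -> float:
    """results holds (all_sub_questions_correct, compositional_question_correct)."""
    # Only questions whose sub-questions were all answered correctly count.
    eligible = [composed_ok for subs_ok, composed_ok in results if subs_ok]
    if not eligible:
        raise ValueError("no question had all of its sub-questions answered correctly")
    failures = sum(1 for composed_ok in eligible if not composed_ok)
    return failures / len(eligible)


# Made-up evaluation records for five compositional questions.
records = [(True, True), (True, False), (True, False), (False, False), (True, True)]
print(compositionality_gap(records))  # 2 failures out of 4 eligible questions -> 0.5
```

The fourth record is excluded from the denominator because the model did not know the underlying facts, so its failure says nothing about composition.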

As the size of the GPT-3 family of models increases, their single-hop question answering performance improves faster than their multi-hop performance does. This suggests that while more powerful models memorize and recall more factual knowledge, they show no corresponding improvement in their ability to perform compositional reasoning. Therefore, the compositionality gap does not shrink as the models get larger.

Narrowing the compositionality gap in language models has many potential applications. For example, it could improve the performance of natural language processing systems in tasks such as question answering, dialogue systems, and machine translation. It could also lead to more accurate and efficient information retrieval from large datasets. Additionally, it could help in developing more advanced AI systems that can reason about complex concepts the way humans do.

Self-Ask is a method that improves the performance of language models in answering complex questions by decomposing them into easier sub-questions that the model answers before answering the main question. This method builds on chain of thought prompting, but instead of outputting a continuous chain-of-thought, it has the model explicitly state the next follow-up question it wants to ask before answering it. In addition, Self-Ask inserts scaffolds like "Follow up:", which improves the ability to output the correct final answer in an easily parseable way. This makes it easy to integrate Self-Ask with an internet search engine to answer follow-up questions, which further improves performance.
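A sketch of how the scaffolds make the output easy to parse and to connect to a search engine. Only the "Follow up:" scaffold is taken from the description above; the "Intermediate answer:" and "So the final answer is:" strings, the scripted model and the lookup table standing in for a search engine are assumptions made for this example.

```python
from typing import Callable, Optional

FOLLOW_UP = "Follow up:"
INTERMEDIATE = "Intermediate answer:"      # assumed scaffold
FINAL = "So the final answer is:"          # assumed scaffold


def self_ask(question: str, model: Callable[[str], str],
             search: Callable[[str], str], max_followups: int = 5) -> Optional[str]:
    """Let the model ask follow-ups; answer each one with the search function."""
    transcript = f"Question: {question}"
    for _ in range(max_followups + 1):
        line = model(transcript).strip()
        transcript += "\n" + line
        if line.startswith(FINAL):
            return line[len(FINAL):].strip()
        if line.startswith(FOLLOW_UP):
            sub_question = line[len(FOLLOW_UP):].strip()
            transcript += f"\n{INTERMEDIATE} {search(sub_question)}"
    return None  # no final answer within the follow-up budget


def scripted_model(transcript: str) -> str:
    """Hypothetical stand-in for an LLM prompted with self-ask examples."""
    answered = transcript.count(INTERMEDIATE)
    if answered == 0:
        return "Follow up: When was superconductivity discovered?"
    if answered == 1:
        return "Follow up: Who was U.S. president in 1911?"
    return "So the final answer is: William Howard Taft"


# Lookup table standing in for an internet search engine.
FACTS = {
    "When was superconductivity discovered?": "1911",
    "Who was U.S. president in 1911?": "William Howard Taft",
}

answer = self_ask("Who was U.S. president when superconductivity was discovered?",
                  scripted_model, lambda q: FACTS.get(q, "unknown"))
print(answer)
```

Because every follow-up sits on its own prefixed line, swapping the lookup table for a real search call requires no change to the parsing logic.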

Reflexion

Generate reflections on the trajectories that led to mistakes. Keep the history of trajectories and reflections as memory to guide future attempts.

Reflexion's self-reflection process differs from traditional decision-making agents in that it allows the agent to learn from its own mistakes through a process of trial and error. This is achieved by endowing the agent with dynamic memory and self-reflection capabilities, which enhance its existing reasoning trace and task-specific action choice abilities. The agent is also equipped with a simple heuristic for detecting hallucination and inefficient action execution.

By pinpointing these instances, avoiding repetition in action sequences, and constructing an internal memory map of the given environment, Reflexion can improve its performance in decision-making and knowledge-intensive tasks. The implications of this are that we have another tool beyond “prefix prompt engineering” and “fine-tuning” to get LLMs to do what we want. [2]
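The trial-and-error loop with memory can be sketched like this. It is an illustration under stated assumptions rather than the Reflexion implementation: the agent, the success check and the reflection step are hypothetical stubs, and the only heuristic shown is a check for repeated actions.

```python
from typing import Callable, List, Optional, Tuple

Trajectory = List[str]


def reflexion(task: str,
              attempt: Callable[[str, List[str]], Trajectory],
              succeeded: Callable[[Trajectory], bool],
              reflect: Callable[[Trajectory], str],
              max_trials: int = 3) -> Tuple[Optional[Trajectory], List[str]]:
    """Retry the task, carrying reflections on failed trials forward as memory."""
    memory: List[str] = []
    for _ in range(max_trials):
        trajectory = attempt(task, memory)
        if succeeded(trajectory):
            return trajectory, memory
        memory.append(reflect(trajectory))  # learn from the failed trial
    return None, memory


# Hypothetical stubs; a real agent would be an LLM acting in an environment.
def attempt(task: str, memory: List[str]) -> Trajectory:
    if not memory:
        return ["open drawer", "open drawer", "open drawer"]
    return ["open cupboard", "take key"]


def succeeded(trajectory: Trajectory) -> bool:
    return "take key" in trajectory


def reflect(trajectory: Trajectory) -> str:
    # Simple heuristic: repeated actions signal an inefficient trial.
    if len(set(trajectory)) < len(trajectory):
        return f"I repeated '{trajectory[0]}' without progress; try a different location."
    return "The plan did not reach the goal; try a different approach."


trajectory, memory = reflexion("find the key", attempt, succeeded, reflect)
print(trajectory)
print(memory)
```

No weights are updated anywhere in this loop; the improvement between trials comes entirely from the reflections stored in `memory`.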

How to evaluate LLMs with tools?

API-Bank includes 53 commonly used API tools that can help LLMs improve their contextual processing abilities. These tools cover a wide range of functionalities, such as language translation, image recognition, and sentiment analysis. By integrating these tools into their workflows, LLMs can better understand the context of human instructions and generate more accurate responses. For example, an LLM might use a translation tool to understand instructions in a different language or an image recognition tool to identify objects in a visual scene. The effectiveness of these tools in improving LLM performance is evaluated through experimental analysis in API-Bank.

Peek into APIs implemented in API-Bank:

Tool Search: ToolSearcher

Account Management: RegisterUser, DeleteAccount, ModifyPassword, ForgotPassword, CheckToken, GetUserToken

Information Query and Processing: QueryHistoryToday, SearchEngine, Wiki, Dictionary, ImageCaption, SpeechRecognition, Translate, DocumentQA, SendEmail, Calculator

Health Management: QueryHealthData, SymptomSearch, EmergencyKnowledge, AppointmentRegistration, CancelRegistration, ModifyRegistration, QueryRegistration, RecordHealthData

Entertainment: PlayMusic

Travel: BookHotel

Schedule Management: AddReminder, DeleteReminder, ModifyReminder, QueryReminder, AddMeeting, DeleteMeeting, ModifyMeeting, QueryMeeting, AddAgenda, DeleteAgenda, ModifyAgenda, QueryAgenda, AddAlarm, DeleteAlarm, ModifyAlarm, QueryAlarm, GetToday

Smart Home: AddScene, DeleteScene, ModifyScene, QueryScene, TimedSwitch, CancelTimedSwitch

Finance Management: OpenBankAccount, QueryStock, QueryBalance

Parting thoughts

Reason + Act + Memory + Reflect + Ask + what next?


[1] https://react-lm.github.io

[2] https://evjang.com/2023/03/26/self-reflection.html