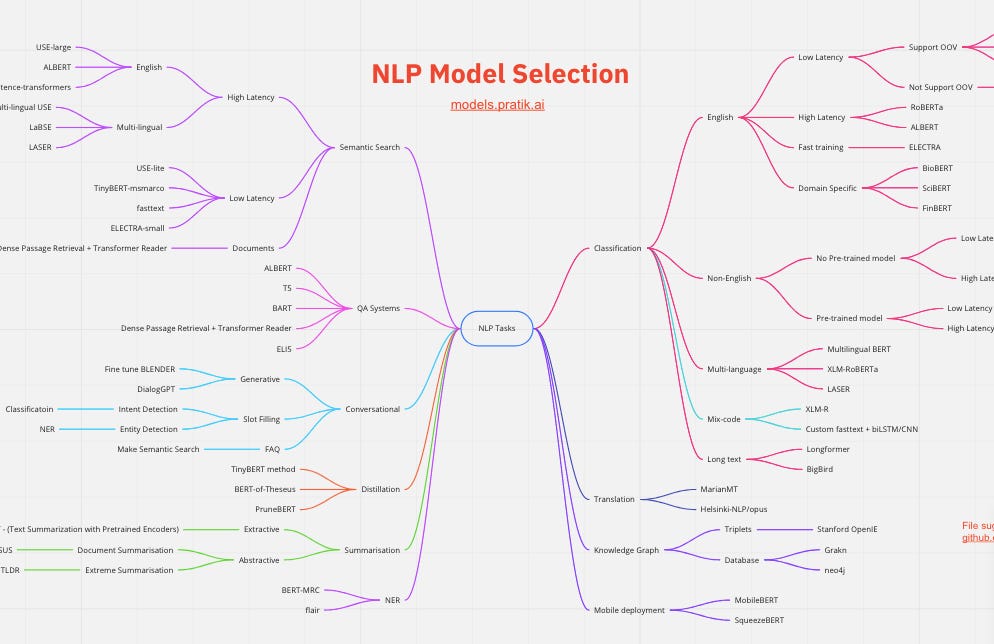

NLP Model Selection v1.1

Making model selection easy

A lot has happened in NLP since I released models.pratik.ai.

The feedback was quite positive, so I have decided to keep it updated.

Here's what got added recently 👻

Models suitable for mobile

MobileBERT (5.5x faster than BERT-base)

SqueezeBERT (4.3x faster than BERT)

Translation

Helsinki-NLP/opus

Long text (>512 tokens)

BigBird (Linear compute with sparse attention)
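
To see why linear compute matters for long text, here is a rough back-of-the-envelope sketch. It assumes each token attends to a fixed budget of positions (a sliding window plus a few global and random tokens), which is the general idea behind sparse attention. The function names and the window, global, and random sizes are illustrative assumptions, not BigBird's actual configuration.

```python
def full_attention_scores(seq_len: int) -> int:
    """Every token attends to every token, so cost grows quadratically."""
    return seq_len * seq_len


def sparse_attention_scores(seq_len: int, window: int = 192,
                            n_global: int = 128, n_random: int = 192) -> int:
    """Each token attends to a fixed budget of positions, so cost grows linearly.

    The default sizes are hypothetical values chosen for illustration only.
    """
    per_token = min(seq_len, window + n_global + n_random)
    return seq_len * per_token


for n in (512, 4096):
    full = full_attention_scores(n)
    sparse = sparse_attention_scores(n)
    print(f"{n:>5} tokens: full={full:,} sparse={sparse:,} ratio={full / sparse:.1f}x")
```

Under these assumed sizes the two patterns cost the same at 512 tokens, while at 4096 tokens the sparse pattern computes 8x fewer attention scores, and the gap keeps widening as the text gets longer.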

Semantic Search

LaBSE (supports 109 languages)

LASER (supports 93 languages)

Dense Passage Retrieval + Transformer Reader
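
Whichever encoder you pick, semantic search boils down to embedding the query and the documents, then ranking by similarity. Here is a minimal sketch of the ranking step, assuming the embeddings already exist. The tiny 3-dimensional vectors are made up to stand in for real sentence embeddings, and `rank_by_cosine` is a hypothetical helper name.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_by_cosine(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scores = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy embeddings (assumption): a real system would get these from an encoder.
docs = {
    "doc_cats": [0.9, 0.1, 0.0],
    "doc_dogs": [0.7, 0.3, 0.1],
    "doc_tax": [0.0, 0.2, 0.9],
}
query = [1.0, 0.0, 0.0]

ranking = rank_by_cosine(query, docs)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

With a multilingual encoder the same ranking works across languages, since queries and documents land in one shared vector space.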

Summarisation

PEGASUS (SOTA on summarisation)

Domain models

Replaced BioBERT with ouBioBERT, which outperforms it on medical tasks

Have suggestions? Create an issue @ github.com/bhavsarpratik/nlp-models

BONUS!

This paper finds that if we remove the last 6 layers of BERT, we still get nearly the same performance. No need for fancy distillation. The truncated model and the distilled one are both the same size, 66M parameters. This clearly means we love over-engineering: we over-engineered BERT, then we over-engineered ways to compress BERT 🤫
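
The 66M figure can be sanity-checked with a little arithmetic. This sketch assumes the standard BERT-base hyperparameters (hidden size 768, feed-forward size 3072, vocabulary of 30,522 tokens, 512 positions), and `bert_param_count` is a hypothetical helper written for illustration.

```python
def bert_param_count(num_layers, hidden=768, ffn=3072, vocab=30522,
                     max_pos=512, type_vocab=2, include_pooler=True):
    """Count weights and biases in a BERT-style encoder (assumed BERT-base sizes)."""
    layer_norm = 2 * hidden  # scale and shift vectors
    # Token, position, and segment embeddings plus their LayerNorm.
    embeddings = (vocab + max_pos + type_vocab) * hidden + layer_norm
    # Query, key, value, and output projections plus LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + layer_norm
    # Two dense layers (hidden -> ffn -> hidden) plus LayerNorm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + layer_norm
    pooler = hidden * hidden + hidden if include_pooler else 0
    return embeddings + num_layers * (attention + feed_forward) + pooler


print(f"12 layers: {bert_param_count(12):,}")
print(f" 6 layers: {bert_param_count(6, include_pooler=False):,}")
```

Keeping 6 of the 12 layers leaves about 66M parameters in the embeddings and encoder (about 67M if you also count the pooler), down from roughly 109M for the full model.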

Come join Maxpool, a data science community for discussing real ML problems!

Ask me anything on ama.pratik.ai 👻

You can try ask.pratik.ai for any study material.

Let me know your suggestions via feedback.pratik.ai 😃