LLM Chronicles #7: How To Evaluate LLMs? | Open LLM Leaderboard

Let's explore the internals of automated LLM evaluation

Update: We have restarted the Maxpool WhatsApp group. We plan to discuss more about LLMs and vector search. You can join with this link: join.maxpool.ai.

The emergence of LLMs has sparked intense debates regarding their practical value. With high expectations for these language models, we envision them as potential stepping stones toward achieving AGI. This ambitious outlook is mirrored in the benchmarks designed for assessing their capabilities, aiming for multi-task functionality. In my upcoming series of posts, I intend to delve into diverse leaderboards made for LLM evaluation.

Why are LLM evals so hard? Even humans would suck at them.

LLMs, at their core, function as models for generating the next token in a sequence. This sequence can encompass various types of content, ranging from different languages and real numbers to emojis and symbols. The generated output can be as simple as a single word, a short phrase, or even an extensive discourse. We leverage these versatile characteristics to assess LLMs' performance.

The evaluation of LLMs involves inspecting their diverse capabilities and identifying any potential limitations. Given the rapid emergence of new models, researchers have devised automated evaluation methods. These methods have given rise to multiple leaderboards from various research groups. Let's understand them better to get a feel for how far we are moving towards AGI.

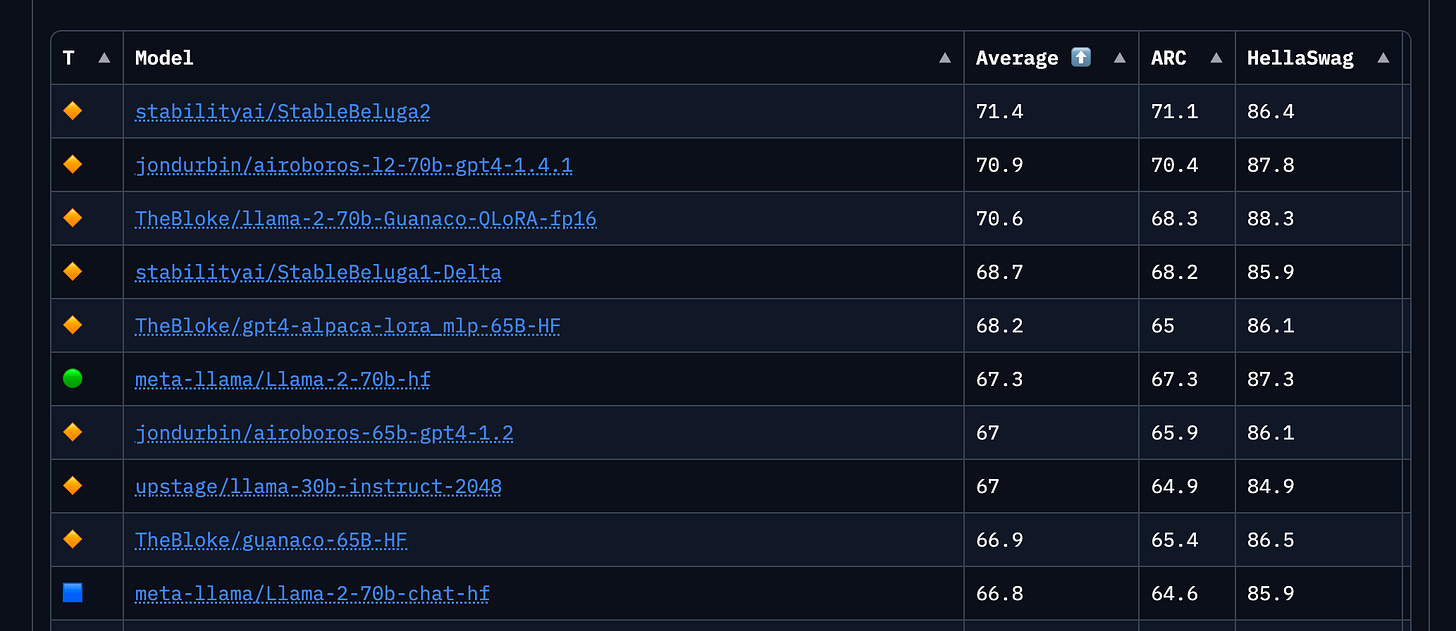

The Open LLM Leaderboard is an automated evaluation created by Hugging Face. It consists of four tasks: ARC, HellaSwag, MMLU, and TruthfulQA. These tasks test reasoning, general knowledge & bias across a wide variety of fields in 0-shot and few-shot settings.

AI2 Reasoning Challenge (25-shot)

HellaSwag (10-shot)

MMLU (5-shot)

TruthfulQA (0-shot)
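The "k-shot" labels above refer to how many solved examples are placed in the prompt before the question being tested. Here is a minimal sketch of that idea. The prompt layout, the `format_question` and `build_k_shot_prompt` helpers, and the dictionary keys are all assumptions made for illustration; the leaderboard's actual templates may differ.

```python
from typing import Dict, List

LETTERS = "ABCDEFGH"


def format_question(question: str, choices: List[str], answer: str = "") -> str:
    """Render one multiple-choice question; leave the answer blank for the target."""
    lines = [f"Question: {question}"]
    for letter, choice in zip(LETTERS, choices):
        lines.append(f"{letter}. {choice}")
    # rstrip() drops the trailing space when no answer is supplied
    lines.append(f"Answer: {answer}".rstrip())
    return "\n".join(lines)


def build_k_shot_prompt(solved: List[Dict], target: Dict, k: int) -> str:
    """Prepend k solved examples to the unanswered target question (k=0 is zero-shot)."""
    blocks = [
        format_question(ex["question"], ex["choices"], ex["answer"])
        for ex in solved[:k]
    ]
    blocks.append(format_question(target["question"], target["choices"]))
    return "\n\n".join(blocks)


# Hypothetical examples, not taken from any benchmark
solved_examples = [
    {"question": "Which gas do plants absorb?", "choices": ["Oxygen", "Carbon dioxide"], "answer": "B"},
    {"question": "What is 2 + 2?", "choices": ["4", "5"], "answer": "A"},
]
target_question = {"question": "Which planet is closest to the Sun?", "choices": ["Mercury", "Venus"]}

prompt = build_k_shot_prompt(solved_examples, target_question, k=2)
```

With `k=0` the same function produces a zero-shot prompt containing only the target question.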

The tasks are multiple choice questions. This allows us to measure the performance of LLMs in terms of accuracy. The leaderboard shows metrics on individual tasks and a final score is calculated as an average of all scores.
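The scoring described above can be sketched in a few lines: accuracy per task, then an unweighted average across tasks. The function names and the numbers in the usage example are made up for illustration and are not real leaderboard results.

```python
from statistics import mean
from typing import Dict, List


def accuracy(predictions: List[str], gold: List[str]) -> float:
    """Fraction of questions where the predicted choice matches the gold choice."""
    if not gold or len(predictions) != len(gold):
        raise ValueError("predictions and gold must be non-empty and the same length")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)


def leaderboard_score(task_accuracies: Dict[str, float]) -> float:
    """Final score as the plain average of the per-task accuracies."""
    return mean(list(task_accuracies.values()))


# Hypothetical per-task accuracies for one model
scores = {"arc": 0.5, "hellaswag": 0.7, "mmlu": 0.4, "truthfulqa": 0.4}
final = leaderboard_score(scores)
```

Because the average is unweighted, each task counts equally no matter how many questions it contains.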

Interesting considerations:

To avoid communication-dependent results, only one GPU is used.

LLMs are evaluated with a batch size of one while generating a thousand tokens.

Let's understand a bit more about these tasks.

ARC

Type: reasoning, multiple choice

Description: The authors created the ARC (AI2 Reasoning Challenge) dataset to provide a challenging benchmark for evaluating and advancing the capabilities of AI systems in science reasoning and question-answering tasks.

It consists of 7,787 science exam questions that are gathered from various sources, including licensed science questions provided by a research partner affiliated with AI2 (Allen Institute for Artificial Intelligence). These exam questions are presented in English language text-only format and cover a range of difficulty levels spanning several grade levels.

Each question in the dataset follows a multiple-choice structure, typically presenting four answer options. The dataset is divided into two main subsets:

Challenge Set: This subset contains 2,590 questions that are considered "hard" because both a retrieval method and a co-occurrence method (basic methods used by AI systems) fail to correctly answer them. These questions are specifically designed to be difficult and require more advanced reasoning and inference capabilities beyond simple information retrieval.

Easy Set: This subset comprises 5,197 questions that are relatively easier compared to the questions in the Challenge Set. They are still valuable for evaluating AI systems' performance on standard question-answering tasks.
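The split described above can be expressed as a simple partition: a question goes to the Challenge Set only if both baseline methods answer it incorrectly, and everything else goes to the Easy Set. In this sketch the two solvers are passed in as plain callables. They, and the `answer` key, are hypothetical stand-ins, not the authors' actual baselines.

```python
from typing import Callable, Dict, List, Tuple

Solver = Callable[[Dict], str]  # takes a question dict, returns a choice label


def split_arc(
    questions: List[Dict],
    retrieval_solver: Solver,
    cooccurrence_solver: Solver,
) -> Tuple[List[Dict], List[Dict]]:
    """Partition questions into (challenge, easy) using two baseline solvers."""
    challenge, easy = [], []
    for q in questions:
        retrieval_ok = retrieval_solver(q) == q["answer"]
        cooccurrence_ok = cooccurrence_solver(q) == q["answer"]
        # "Hard" means both baselines fail; anything else is "easy"
        if retrieval_ok or cooccurrence_ok:
            easy.append(q)
        else:
            challenge.append(q)
    return challenge, easy


# Sanity check on the sizes quoted above: the two subsets add up to the full dataset
assert 2590 + 5197 == 7787
```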

HellaSwag

Type: reasoning, multiple choice

Description: The dataset was designed to be challenging, with questions that are trivial for humans (achieving over 95% accuracy) but pose significant difficulties for state-of-the-art models (achieving less than 48% accuracy).

To construct this challenging dataset, the authors used a data collection paradigm called Adversarial Filtering (AF). In AF, a series of discriminators are used to iteratively select an adversarial set of machine-generated wrong answers. The length and complexity of the examples in the dataset are scaled up towards a "Goldilocks" zone, where the generated text is ridiculous to humans but is often misclassified by state-of-the-art models.
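To make the Adversarial Filtering loop more concrete, here is a heavily simplified toy sketch. Each round fits a discriminator on one half of the data, then, on the other half, swaps any wrong answer for a candidate the discriminator finds harder to spot. The data layout, the `fit` callable, and the length-based `toy_fit` stand-in are all assumptions for illustration. The real pipeline trains neural discriminators and this is not the authors' implementation.

```python
import copy
import random
from typing import Callable, Dict, List

# A scorer returns how machine-written an ending looks (higher = easier to detect)
Scorer = Callable[[str, str], float]


def adversarial_filter(
    examples: List[Dict],
    fit: Callable[[List[Dict]], Scorer],
    rounds: int = 3,
    seed: int = 0,
) -> List[Dict]:
    """Each example needs 'context', 'distractors' and a 'pool' of spare candidates."""
    work = copy.deepcopy(examples)  # leave the caller's data untouched
    rng = random.Random(seed)
    for _ in range(rounds):
        order = list(work)
        rng.shuffle(order)
        half = len(order) // 2
        train, held_out = order[:half], order[half:]
        score = fit(train)  # re-fit the discriminator every round
        for ex in held_out:
            for i, current in enumerate(ex["distractors"]):
                if not ex["pool"]:
                    break
                # Pick the spare candidate that looks least machine-written
                best = min(ex["pool"], key=lambda c: score(ex["context"], c))
                if score(ex["context"], best) < score(ex["context"], current):
                    ex["pool"].remove(best)
                    ex["pool"].append(current)
                    ex["distractors"][i] = best
    return work


def toy_fit(train: List[Dict]) -> Scorer:
    """Hypothetical stand-in for training: pretends longer endings look more machine-like."""
    return lambda context, ending: float(len(ending))
```

The key design point is that the discriminator is always applied to examples it was not fitted on, so the surviving wrong answers are those that fool a model on unseen data.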

MMLU

Type: reasoning / instruction following, multiple choice, multi domain

Description: The authors created the Massive Multitask Language Understanding (MMLU) benchmark as a new test that measures a text model's multitask accuracy. The MMLU test covers 57 tasks, including topics such as elementary mathematics, US history, computer science, law, and more. The main objective of this dataset is to evaluate how well language models can perform across diverse tasks and domains, reflecting their level of world knowledge and problem-solving abilities.

The MMLU test revealed that the models evaluated by the authors had near-random accuracy on socially important subjects such as morality and law. This result raises concerns about the ethical implications of deploying language models with limited understanding of critical social issues.

TruthfulQA

Type: factual, multiple choice

Description: This dataset was created to evaluate how well language models can avoid generating false answers that arise from false beliefs or misconceptions found in human texts. The dataset comprises 817 questions spanning 38 categories, including health, law, finance, and politics.

To create this benchmark, the authors carefully crafted questions that some humans would answer falsely due to holding a false belief or misconception. By doing so, the authors aimed to test whether language models could discern the falsehoods and generate accurate and truthful answers instead of merely imitating the false answers found in human-written texts.

Interestingly, their study found that the largest models were generally the least truthful. This finding contrasts with other NLP tasks, where performance tends to improve with the size of the model. Given these findings, the authors proposed that scaling up language models alone is not the most promising approach for improving truthfulness in question answering.

Parting thoughts…

I found the approach taken here commendable as it thoroughly evaluates the capabilities of an LLM across multiple tasks in diverse domains. It not only highlights the model's strengths but also rigorously checks for potential biases. However, it falls short in evaluating tasks such as summarization, dialogue generation & long-form question answering. The constraints of automated, model-free evaluation do not allow evaluation of long-form text generation capabilities.

Moving forward, we will delve into additional benchmarks to further extend our understanding of LLM evaluation.
