Better Pull Request Reviews

What top companies do

You don’t get anywhere if you don’t push the code. You don’t get anywhere if you push a code that fucks up. You don’t get anywhere if you push a half-fixed code.

As you keep churning code, you wonder how to maintain the technical quality of a codebase. You learn a few things here and there - SOLID, CI/CD and writing test, but is there anything more to this?

What can be a better way to think about this problem? Should I think on a higher level (abstraction) or should I think on a lower level (details)?

I am putting up a few ideas worth pursuing.

Taking the adage - “If you can't measure it, you can't improve it.” - we need to define a measure of the quality of the codebase. It requires an understanding of the evolution of codebases and the properties which make one good. I am no expert on this topic but some items which can be done are

Static code base metrics

test coverage ⬆️

avg lines per file ⬇️

no. of #ToDo ⬇️

Pull request metrics

time to merge ⬇️

no. of reviews done ⬇️

no. of comments ⬇️

test coverage change ⬆️

unit/integration/performance tests added ⬆️

Writing better code is a matter of skill x time x feedback. If you have skill but no time, you still end up with a mediocre acceptable code base at the best. It is impossible to buy time for doing PRs unless you are in a very senior role.

If you can do faster reviews, you can save time and give more feedback. This helps others to iterate quickly and yourself to spend time improving your own work. But how to do quick PR reviews?

Common

Everyone should be onboarded with the coding standards - often different people have a different style of writing at first. You always meet a colleague who brings camelCase out of nowhere in a Python codebase.

Add linting to increase the readability of the code

Author

Make PRs small for god sake

Avoid - I will fix this later mindset

Deploy first, refactor later. Merge when acceptable, not when perfect.

Don’t feel angry about the review comments

Do a self-review before pushing bad changes or forgotten changes

Did I add all documentation?

Did I add all type-hints?

Did I add all tests?

Did I add what was in the scope?

Did I add what was not in the scope?

Reviewer

Check the title of PR and associated task(Jira/Linear) to understand the scope

Review the file with the biggest change to understand the theme. Some also like to start with the tests modified

Go through the changes in a logical sequence

Fix up reminders and book time on the calendar for everyone to review

If the PR is big, leave some comments and request the author to break into multiple CL(changelist)

Non-critical or tasteful changes can be “nit:” comments and so they can be neglected by the author

Respond fast to comment replies

Last but not least - assign max time till the first review - 1 day is the industry standard. Hold people accountable if they fail at this.

Do commend the work if you are impressed with any implementation. PRs is not about just finding the flaws

Don’t use negative language

Enforce common tool if you see a new tool

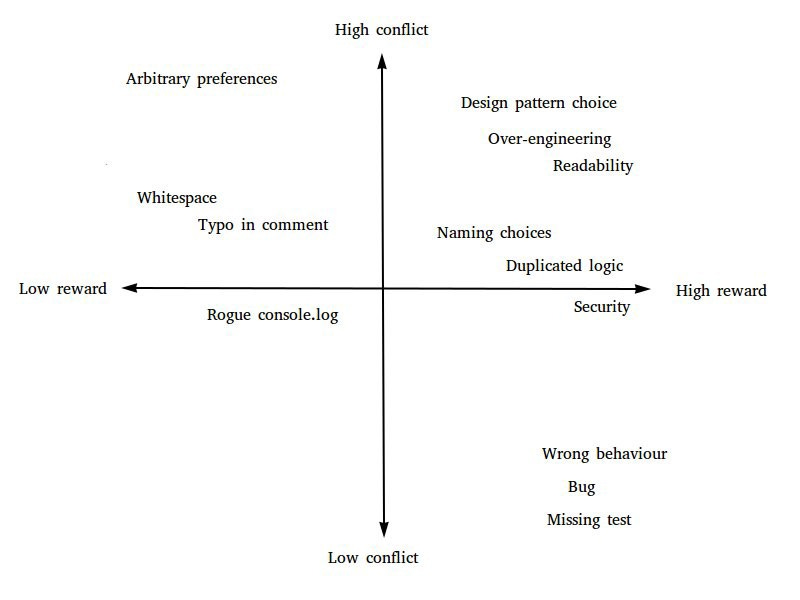

Debate as per the severity

Automation

CI

code linter

import sorter

comment format checker

test runner

PR labeller for visibility

Codeowners setting (Github feature)

CD

deploy to dev/staging/production

slack/mail summaries of deployments

ability to deploy a particular commit for rollbacks

Self pull request review checklist

A lot of comments in PR can be avoided if the author does a self-review themselves. This led to the idea of creating an action that posts a checklist after a PR is created. Once the author checks all the things on the list, it is truly ready for review. This can save a ton of time for everyone.

Missing anything from the checklist is considered to be bad.

Github action yml

| on: | |

| pull_request: | |

| types: [opened] | |

| jobs: | |

| comment: | |

| runs-on: ubuntu-latest | |

| steps: | |

| - uses: actions/github-script@v5 | |

| with: | |

| script: | | |

| github.rest.issues.createComment({ | |

| issue_number: context.issue.number, | |

| owner: context.repo.owner, | |

| repo: context.repo.repo, | |

| body: '**Self PR review checklist** \n- [ ] is not draft \n- [ ] add/remove dependencies \n- [ ] docstrings and type hints \n- [ ] split large func into small funcs \n- [ ] proper variable names \n- [ ] added PR label \n- [ ] optimise modules that takes more than O(n) \n- [ ] moved values to config or shared libs \n- [ ] tested on staging \n- [ ] tests \n - [ ] unit test \n - [ ] integration test \n - [ ] performance test \n - [ ] system test \n' | |

| }) |

Come join Maxpool - A Data Science community to discuss real ML problems!

Connect with me on Medium, Twitter & LinkedIn.

Sources

https://google.github.io/eng-practices/

Awesome insights