LLM Chronicles #4: Adapting OpenAI / Cohere / Sentence Transformer Embeddings For Your Chatbot

Improve generation by improving the retrieval

There is a lot of frenzy around retrieval-augmented generation (RAG) with LangChain. To support retrieval, you either use pure vector search, or full-text search followed by reranking with an encoder. In both cases, the quality of the results is bottlenecked by the dense vector encoder. Most people use an OpenAI, Cohere or Sentence Transformers model for the encoding.
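Whichever path you pick, the encoder decides which K chunks end up in the prompt. As a minimal sketch of pure vector search, assuming the embeddings are already computed, the snippet below ranks documents by cosine similarity with NumPy. The function name `top_k_search` and the toy 3-d vectors are illustrative assumptions; a vector DB does the same job with an approximate index.

```python
import numpy as np

def top_k_search(query_vec, doc_matrix, k):
    """Return (indices, scores) of the k documents most similar to the query (cosine)."""
    q = query_vec / np.linalg.norm(query_vec)
    docs = doc_matrix / np.linalg.norm(doc_matrix, axis=1, keepdims=True)
    scores = docs @ q                    # cosine similarity of every document to the query
    order = np.argsort(-scores)[:k]      # highest similarity first
    return order.tolist(), scores[order].tolist()

# toy 3-d "embeddings" standing in for real encoder outputs (hypothetical)
docs = np.array([
    [1.0, 0.0, 0.0],
    [0.8, 0.6, 0.0],
    [0.0, 0.0, 1.0],
])
query = np.array([1.0, 0.1, 0.0])
idx, scores = top_k_search(query, docs, k=2)
print(idx)  # [0, 1]
```

If the encoder maps a query and its answer passage far apart, no amount of ranking logic after this step can recover the passage, short of raising K.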

Since these models are trained on open web data with a fixed cutoff, they might not understand the vocabulary or context of your closed data, and so perform worse on it. To still catch the relevant results, you have to either increase the top K or improve the encoder. Increasing top K is easy but comes with tradeoffs: a larger prompt blows up the LLM cost and latency, and it reduces the number of turns you can have in the chat before hitting the maximum context length. In addition, unnecessary context in the prompt raises the risk of hallucination. Hence improving the embeddings is often the better path, depending on the number of users, how critical performance is, and the long-term plan for the project.
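To make the top K tradeoff concrete, here is some back-of-the-envelope token accounting. Every number in it (a 4,096-token context, 300-token chunks, 150 tokens per chat turn, the reserved system and answer budgets) is a hypothetical assumption, and `prompt_budget` is an illustrative helper, not part of any library.

```python
def prompt_budget(top_k, chunk_tokens, context_limit=4096, system_tokens=200,
                  answer_tokens=500, tokens_per_turn=150):
    """Hypothetical token accounting for one RAG chat request.

    Returns (retrieved_context_tokens, chat_turns_that_still_fit).
    All default values are illustrative assumptions, not measurements.
    """
    retrieved = top_k * chunk_tokens  # grows linearly with K
    remaining = context_limit - system_tokens - answer_tokens - retrieved
    turns = max(remaining, 0) // tokens_per_turn  # chat history turns that still fit
    return retrieved, turns

for k in (3, 6, 10):
    print(k, prompt_budget(k, chunk_tokens=300))
```

Under these assumptions, going from K=3 to K=10 leaves room for 2 turns of history instead of 16, and the retrieved context you pay for on every call more than triples.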

I will lay out a few techniques to adapt your embeddings. For simplicity, let’s assume we are building a QnA engine.

Data preparation

There are three data scenarios:

Raw text only (cold start)

Positive pairs only, from user signals or from queries generated from the text

Both positive and negative pairs, from user signals or from queries generated from the text

There are a few patterns for generating positive queries:

Rephrase the text

Extract a span from the text

Extract a span from the text and rephrase it with GPT

Use GPT to generate a question related to the passage
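Two of these patterns can be sketched in plain Python: extracting a sentence as a span query, and building a prompt that asks GPT for a question about the passage. The sentence splitter, the prompt wording and the helper names are assumptions for illustration; the LLM call itself is left out, so plug the prompt into whichever client you use.

```python
import random
import re

def extract_span(passage, rng):
    """'Extract a span' pattern: pick one sentence of the passage as a pseudo-query."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", passage) if s.strip()]
    return rng.choice(sentences)

def question_prompt(passage):
    """Hypothetical prompt template asking an LLM for a question the passage answers."""
    return (
        "Write one question that is answered by the following passage.\n\n"
        f"Passage: {passage}\n\nQuestion:"
    )

rng = random.Random(0)
passage = "Refunds are processed within 5 days. Contact support to start a refund."
query = extract_span(passage, rng)
positive_pairs = [(query, passage)]
# Send this to your LLM client; its answer, paired with `passage`, is another positive.
prompt = question_prompt(passage)
```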

There are a few patterns for generating negative queries:

Extract a span from the text and use GPT to change its polarity (reverse its meaning)

Use GPT to generate a question unrelated to the passage

Use other queries that have some token overlap with the passage

Use random queries
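The last two patterns need no LLM. A minimal sketch, assuming whitespace tokenization and Jaccard overlap as the measure of "some token overlap" (both are assumptions here, not a fixed recipe): `overlap_negatives` picks the queries that share the most tokens with the passage (hard negatives), and `random_negatives` samples from the pool (easy negatives). Known positives are excluded up front, but hard negatives in particular can still hide real positives.

```python
import random

def tokens(text):
    return set(text.lower().split())

def overlap_negatives(passage, query_pool, n, exclude=()):
    """Hard negatives: the n queries with the highest Jaccard token overlap with the passage."""
    p = tokens(passage)

    def jaccard(q):
        t = tokens(q)
        return len(p & t) / len(p | t) if p | t else 0.0

    candidates = [q for q in query_pool if q not in exclude]
    return sorted(candidates, key=jaccard, reverse=True)[:n]

def random_negatives(query_pool, n, rng, exclude=()):
    """Easy negatives: n queries sampled at random from the pool."""
    candidates = [q for q in query_pool if q not in exclude]
    return rng.sample(candidates, min(n, len(candidates)))

passage = "how to get a refund for a cancelled order"
pool = ["how do i get a refund", "how to track my order",
        "what is the weather today", "best pizza in town"]
positives = {"how do i get a refund"}
hard = overlap_negatives(passage, pool, n=1, exclude=positives)
easy = random_negatives(pool, n=2, rng=random.Random(0), exclude=positives)
```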

When generating negatives, there is a chance of producing a sample that is actually a positive, so the negatives have to be denoised (i.e., the noisy samples removed). We have a few options for denoising:

Use an encoder and remove negatives whose similarity to the passage is above a cutoff

Use a cross-encoder and remove negatives whose score is above a cutoff
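Both denoising options boil down to a threshold on a similarity score. A sketch, assuming `embed` is any function that turns a list of texts into vectors (for example a wrapper around the encoder's `embed_documents`) and `score` is any pairwise cross-encoder scorer. The cutoff value and the toy bag-of-words embedder are placeholders; tune the cutoff on a few hand-labelled samples.

```python
import numpy as np

def denoise_negatives(passage, negatives, embed, cutoff=0.8):
    """Encoder option: drop negatives whose cosine similarity to the passage exceeds cutoff."""
    vecs = np.asarray(embed([passage] + list(negatives)), dtype=float)
    norms = np.linalg.norm(vecs, axis=1, keepdims=True)
    vecs = vecs / np.maximum(norms, 1e-12)   # guard against all-zero vectors
    sims = vecs[1:] @ vecs[0]                # similarity of each negative to the passage
    return [neg for neg, s in zip(negatives, sims) if s <= cutoff]

def denoise_with_cross_encoder(passage, negatives, score, cutoff):
    """Cross-encoder option: score(passage, text) returns a relevance score."""
    return [neg for neg in negatives if score(passage, neg) <= cutoff]

# toy bag-of-words embedder, a hypothetical stand-in for a real encoder
VOCAB = ["refund", "order", "cancel", "weather", "pizza"]
def toy_embed(texts):
    return [[float(t.lower().split().count(w)) for w in VOCAB] for t in texts]

passage = "refund for cancel order"
negatives = ["refund my cancel order", "weather today", "pizza order"]
kept = denoise_negatives(passage, negatives, toy_embed, cutoff=0.8)
```

Here "refund my cancel order" is dropped as a likely false negative, while the two unrelated queries survive.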

After applying these steps, you end up with positive and negative pairs for training. Now we have a few options for training.

Since we cannot fine-tune the OpenAI / Cohere models, we can only train a network on top of their embeddings. With Sentence Transformers, we can choose to train the encoder or keep it frozen. For simplicity, let’s keep it frozen. This makes the training identical for all the models.
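Because the encoder is frozen, its outputs never change between epochs, so each text only needs to be embedded once. A minimal caching sketch, assuming `embed_fn` is something like `embed_documents`; `CachedEncoder` is a hypothetical helper, not a LangChain class. With OpenAI / Cohere this also means each text is sent to the paid API once instead of once per epoch.

```python
import numpy as np

class CachedEncoder:
    """Wraps a frozen embedding function so each unique text is embedded only once."""

    def __init__(self, embed_fn):
        self.embed_fn = embed_fn
        self.cache = {}
        self.calls = 0  # number of texts actually sent to the encoder

    def embed(self, texts):
        # unseen texts, de-duplicated with their order preserved
        missing = list(dict.fromkeys(t for t in texts if t not in self.cache))
        if missing:
            for text, vec in zip(missing, self.embed_fn(missing)):
                self.cache[text] = np.asarray(vec, dtype=np.float32)
            self.calls += len(missing)
        return np.stack([self.cache[t] for t in texts])

# stand-in for a real encoder such as encoder.embed_documents (hypothetical)
fake_embed = lambda texts: [[float(len(t)), 1.0] for t in texts]
cached = CachedEncoder(fake_embed)
first = cached.embed(["query: a", "query: bb", "query: a"])
second = cached.embed(["query: bb"])  # served from the cache, no encoder call
```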

Training

There are three ways to train:

Train the encoder with positive and negative pairs

Train the encoder with triplets of (anchor, positive, negative)

Train a late-interaction model like ColBERT
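The first option is what the rest of this post implements. For the other two, here is a NumPy sketch of the core scoring functions: a triplet margin loss over cosine distance (the margin of 0.5 is an arbitrary assumption) and the MaxSim late-interaction score used by ColBERT. MaxSim needs one vector per token, which the pooled OpenAI / Cohere embeddings do not provide.

```python
import numpy as np

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def triplet_loss(anchor, pos, neg, margin=0.5):
    """Triplet margin loss: push d(anchor, pos) below d(anchor, neg) by at least `margin`."""
    d_pos = 1.0 - cosine(anchor, pos)  # cosine distance to the positive
    d_neg = 1.0 - cosine(anchor, neg)  # cosine distance to the negative
    return max(d_pos - d_neg + margin, 0.0)

def maxsim(query_tokens, doc_tokens):
    """ColBERT-style late interaction: match each query token vector to its most
    similar document token vector, then sum those maxima."""
    q = query_tokens / np.linalg.norm(query_tokens, axis=1, keepdims=True)
    d = doc_tokens / np.linalg.norm(doc_tokens, axis=1, keepdims=True)
    return float((q @ d.T).max(axis=1).sum())

a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])
print(triplet_loss(a, a, b), triplet_loss(a, b, a))  # 0.0 1.5
```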

Train the encoder with positive and negative pairs

We take the Quora duplicate questions dataset, which already has positive and negative pairs. It is not a question-answering dataset, but the methodology remains the same.

We train a dual-tower model, also known as a siamese network: both sentences pass through the same head with shared weights, and the model learns to output embeddings suited to our task. This is an old, well-known approach in the ML community.

```python
from datasets import load_dataset
from sklearn.model_selection import train_test_split
from tqdm.auto import tqdm
import pandas as pd

# download data
seed = 0
dataset = load_dataset("quora", split="train")
sampled_dataset = dataset.shuffle(seed=seed).select(range(400000))

# convert to dataframe
data = []
for d in tqdm(sampled_dataset):
    data.append((d["questions"]["text"][0], d["questions"]["text"][1], int(d["is_duplicate"])))
df = pd.DataFrame(data, columns=["sentence1", "sentence2", "label"])

# keep 100k (1 lakh) positive and 100k negative samples to balance the labels
n = 100000
df = (
    df.groupby("label")
    .apply(lambda x: x.sample(n, random_state=42))
    .reset_index(drop=True)
    .sample(frac=1, random_state=42)
)

# input processing required for e5 models: this is a symmetric task, so both sides get "query: "
df.sentence1 = df.sentence1.apply(lambda x: "query: " + x)
df.sentence2 = df.sentence2.apply(lambda x: "query: " + x)

# split into train & test
train_size = 0.8
train, test = train_test_split(df, train_size=train_size, random_state=seed)
```

Define the embedding model. We are going with e5-small, as it has the highest MTEB score for its size at the time of writing. It also has a smaller embedding size of 384, which means faster vector search and lower vector DB cost.

```python
from langchain.embeddings import HuggingFaceEmbeddings

model_name = "intfloat/e5-small"
encoder = HuggingFaceEmbeddings(model_name=model_name)
```

You can also select OpenAI or Cohere.

```python
from langchain.embeddings import CohereEmbeddings

cohere = CohereEmbeddings(model="medium", cohere_api_key="my-api-key")

from langchain.embeddings import OpenAIEmbeddings

openai = OpenAIEmbeddings(openai_api_key="my-api-key")

# More options here - https://python.langchain.com/en/latest/reference/modules/embeddings.html
# If you switch models, set `dim` in the head model below to that model's embedding size.
```

Define a 2-layer siamese head model.

```python
import torch
from torch import nn
import torch.nn.functional as F

# Train a siamese network which takes two sentences as input and outputs a similarity score.
# The encoder is frozen; only dense1 and dense2 are trained. e5-small's embedding dim is 384.
# encodings1 -> dropout -> dense1 -> dropout -> dense2 -> updated encodings1
# encodings2 -> dropout -> dense1 -> dropout -> dense2 -> updated encodings2
# cosine similarity between the updated encodings
# loss = MSE(label, cosine similarity)

device = "cuda" if torch.cuda.is_available() else "cpu"
dim = 384  # e5-small embedding size; change it for OpenAI / Cohere embeddings

class Model(nn.Module):
    def __init__(self, encoder, dim=dim, dropout=0.2):
        super().__init__()
        self.encoder = encoder  # frozen LangChain embedding model (not an nn.Module)
        self.dropout = nn.Dropout(dropout)
        self.dense1 = nn.Linear(dim, dim)
        self.dense2 = nn.Linear(dim, dim)
        self.cosine = nn.CosineSimilarity(dim=1)

    def block(self, sentences):
        # embed_documents only accepts the texts, so L2-normalize here instead;
        # this works the same for HuggingFace, OpenAI and Cohere encoders
        encodings = self.encoder.embed_documents(sentences)
        encodings = torch.tensor(encodings, device=device)
        encodings = F.normalize(encodings, dim=1)
        encodings = self.dropout(encodings)
        encodings = self.dense1(encodings)
        encodings = self.dropout(encodings)
        encodings = self.dense2(encodings)
        return encodings

    def forward(self, sentences1, sentences2):
        # the same block (shared weights) is applied to both towers
        encodings1 = self.block(sentences1)
        encodings2 = self.block(sentences2)
        sim = self.cosine(encodings1, encodings2).cpu()
        return sim
```

Train the model.

import matplotlib.pyplot as plt

from IPython.display import clear_output

BATCH_SIZE = 256

train_losses = []

test_losses = []

model = Model(encoder, dim)

model.to(device)

optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

loss = nn.MSELoss()

for epoch in range(0,5):

epoch += 1

print("Epoch: ", epoch)

epoch_train_losses = []

for i in tqdm(range(0, len(train), BATCH_SIZE), total=len(train)//BATCH_SIZE):

#training

batch = train.iloc[i:i+BATCH_SIZE]

sentences1 = batch.sentence1.tolist()

sentences2 = batch.sentence2.tolist()

labels = torch.tensor(batch.label.values).type(torch.FloatTensor)

sim = model(sentences1, sentences2)

loss = torch.nn.functional.mse_loss(sim, labels)

epoch_train_losses.append(loss.item())

loss.backward()

optimizer.step()

optimizer.zero_grad()

train_loss = torch.tensor(epoch_train_losses).mean()

train_losses.append(train_loss)

print("Train loss: ", train_loss)

# evaluation

with torch.no_grad():

sentences1 = test.sentence1.tolist()

sentences2 = test.sentence2.tolist()

labels = torch.tensor(test.label.values).type(torch.FloatTensor)

sim = model(sentences1, sentences2)

loss = torch.nn.functional.mse_loss(sim, labels)

test_losses.append(loss)

print("Test loss: ", loss.item())

clear_output()

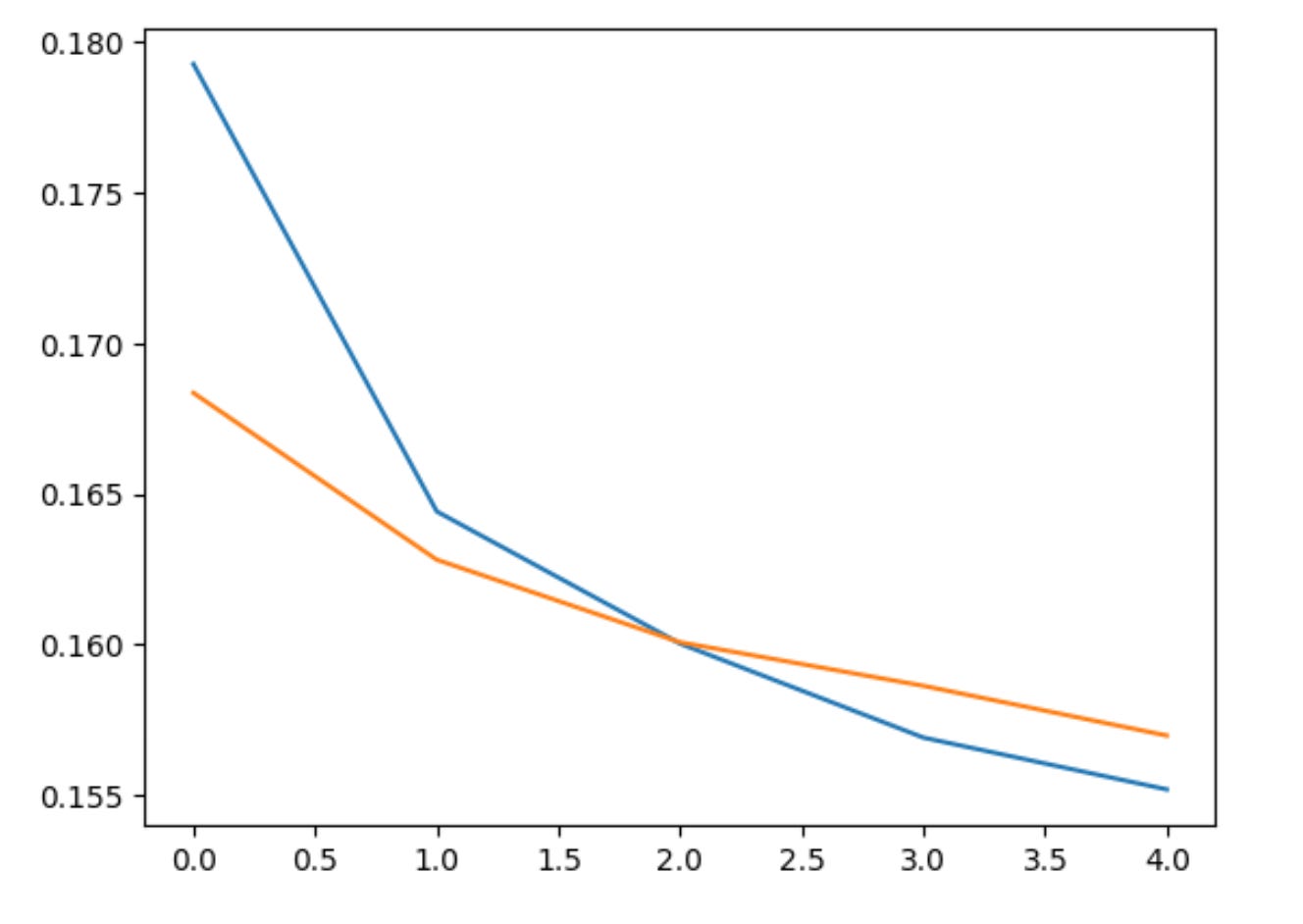

plt.plot(range(epoch), train_losses)

plt.plot(range(epoch), test_losses)

plt.show()Performance of trained e5-small

Performance of untrained e5-small

For the same precision of 80, recall for the positive label goes up from 70 to 77. This means the encoder is now better at finding duplicate questions. We have not done any augmentation or exhaustive hyperparameter tuning, nor trained up to the best epoch. A 7-point jump is a solid improvement.
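Precision and recall here come from thresholding the cosine similarity. One way to compute "recall at a fixed precision" from the test-set similarities and labels is sketched below; this is an assumption about the procedure, not necessarily how the numbers above were produced. Run it on the untrained and trained models' similarities (converted to NumPy) to compare them at the same precision.

```python
import numpy as np

def recall_at_precision(scores, labels, min_precision=0.8):
    """Best recall over all thresholds whose precision is at least `min_precision`.

    scores: predicted similarities; labels: 1 for duplicate pairs, 0 otherwise.
    Returns (recall, threshold), or (0.0, None) if no threshold reaches the precision.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    best_recall, best_threshold = 0.0, None
    for t in np.unique(scores):
        pred = scores >= t  # predict "duplicate" at or above the threshold
        if pred.sum() == 0:
            continue
        tp = int((pred & (labels == 1)).sum())
        precision = tp / pred.sum()
        recall = tp / max(labels.sum(), 1)
        if precision >= min_precision and recall > best_recall:
            best_recall, best_threshold = recall, float(t)
    return best_recall, best_threshold

r, t = recall_at_precision([0.9, 0.8, 0.7, 0.6, 0.5], [1, 1, 0, 1, 0])
```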

You can try the notebook for more experimentation.

Come join Maxpool, a data science community, to discuss real ML problems!